Introduction

The Law of Instrumental Integrity

The Law of Instrumental Integrity (Cote, A. 2025) is a structural framework for analyzing purpose-bearing systems: whether a system can sustain fulfillment of a defined mission over time, and how degradation unfolds when the objective–criterion–action relationship begins to break. It formalizes the conditions under which objectives govern decision criteria, decision criteria govern action, and objective-derived bounds constrain behaviour. It decomposes failure into distinct modes that anticipate the trajectory of drift relative to the objective. This page presents the current formulation for review, critique, and empirical evaluation across domains, with the expectation that definitions, boundaries, and mappings will sharpen through iteration.

Formal definition

Definition and formulation

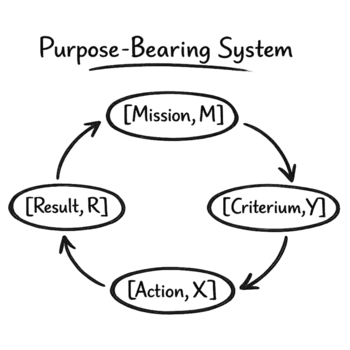

The Law of Instrumental Integrity is a structural law of objective-viability for purpose-bearing systems: systems whose behavior is organized around fulfilling a defined mission or objective M. It states that a viable purpose-bearing system fulfills M through a maintainable action–criterion structure: actions X are selected under decision criteria Y that remain consistent with M, remain bounded by M’s implied constraints, and preserve a non-collapsing distinction between action and criterion.

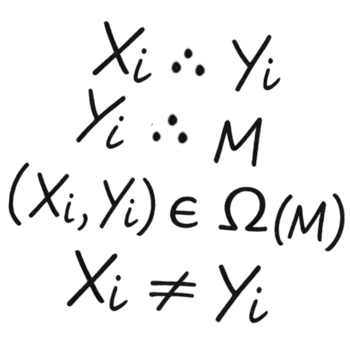

At the level of system structure, the law is expressed as:

∀i:(Xᵢ ∵ Yᵢ ∧ Yᵢ ∵ M ∧ (Xᵢ, Yᵢ) ∈ Ω(M) ∧ Xᵢ ≠ Yᵢ)

where Xᵢ represents an action instance, Yᵢ the decision criterion under which Xᵢ is selected, and M the system’s defined mission or objective. The operator ∵ is read as “because,” denoting selection or decision dependence rather than logical implication or mechanistic causation. The relation Yᵢ ∵ M is interpreted as: the decision criterion Yᵢ is consistent with M.

Ω(M) denotes the mission-admissible region: the set of admissible action–criterion pairs permitted under M, specified ex ante by the constraints and boundary conditions stated or implied by the objective (e.g., prohibitions, operating envelopes, required invariants, and acceptable tradeoffs). A pair (Xᵢ, Yᵢ) lies in Ω(M) when the selected action and the criterion that selected it remain consistent with those objective-derived bounds.

Violations of any term manifest as directional degradation relative to M: loss of continuity between criteria and action, detachment of criteria from the objective, departure from objective-derived bounds, or collapse of the distinction between action and criterion. As violations accumulate or persist, the system’s capacity to fulfill M erodes in a predictable structure. When all four terms are jointly violated, objective-directed behavior cannot be maintained and fulfillment of the original objective becomes structurally impossible.
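To make the link between the law and its violations explicit, the expression can be negated term by term. The block below is a sketch in LaTeX notation, assuming `\because` from the `amssymb` package stands in for the ∵ operator. The quantifier on the joint-violation line is an assumption; the text above does not say whether joint violation is assessed per pair or system-wide.

```latex
% Integrity: every action-criterion pair satisfies all four terms.
\forall i:\; (X_i \because Y_i) \,\land\, (Y_i \because M)
  \,\land\, (X_i, Y_i) \in \Omega(M) \,\land\, X_i \neq Y_i

% Degradation: the negation of the law (De Morgan). At least one term
% fails for at least one pair.
\exists i:\; \lnot (X_i \because Y_i) \,\lor\, \lnot (Y_i \because M)
  \,\lor\, (X_i, Y_i) \notin \Omega(M) \,\lor\, X_i = Y_i

% Joint violation for a given pair i (quantifier assumed, see lead-in):
% all four terms fail together.
\lnot (X_i \because Y_i) \,\land\, \lnot (Y_i \because M)
  \,\land\, (X_i, Y_i) \notin \Omega(M) \,\land\, X_i = Y_i
```

Read this way, each disjunct in the second line corresponds to one directional form of degradation, and the third line is the limiting case in which no term remains intact.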

Scope of applicability

The Law of Instrumental Integrity applies to any purpose-bearing system that (1) selects actions under some criterion and (2) is evaluated relative to a defined objective. Applicability does not depend on what the system is made of. It depends on whether the system has an objective M, produces actions X, and selects those actions under criteria Y that can remain consistent with M and bounded by objective-derived constraints. Across domains, degradation appears differently, but the underlying failure geometry is the same: criteria detach from the objective, boundaries erode, continuity fails, or action–criterion distinctions collapse.

The pattern recognition that led to this formal expression emerged while comparing organizational failure dynamics with safety constraints in agentic AI systems. The resulting structure appears to generalize to purpose-bearing systems across multiple domains.

Institutional systems

Organizations, governments, and bureaucracies, where policies and processes are selected under decision criteria (KPIs, governance rules, legal frameworks) and bounded by institutional mission constraints.

Technological systems

Software systems, automated agents, and artificial intelligence, where actions are selected under explicit criteria (policies, reward functions, rulesets) and bounded by specified objectives and safety envelopes.

Mechanical systems

Controllers, regulators, and feedback mechanisms, where control actions are selected under measurable criteria (error signals, thresholds, state estimates) and bounded by design intent and operating envelopes.

Biological systems

Homeostatic regulation, immune control, reflex circuits, and physiological feedback loops, where actions are selected under regulatory criteria and bounded by organism-level viability constraints.

Sociological systems

Groups, norms, cultures, and coordination structures, where collective actions are selected under shared criteria (norms, incentives, legitimacy rules) and bounded by group-level objectives and constraints.

Psychological systems

Habits, coping strategies, decision heuristics, and behavioral routines, where actions are selected under internal criteria (beliefs, goals, threat models) and bounded by adaptive constraints.

Failure condition

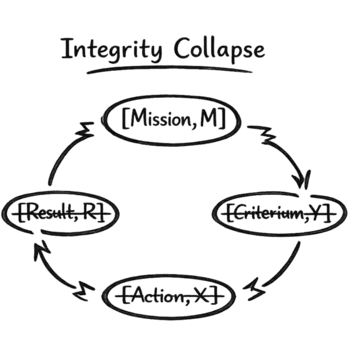

The law’s core constraints describe the minimal structure required for objective-directed behavior: criteria remain consistent with the objective, action selection remains criterion-governed, action–criterion pairs remain within objective-derived bounds, and action remains distinct from its selection criterion. In complex systems, violations of individual constraints may be buffered by redundancy, slack, or compensatory control, so failure may not be immediate even as degradation relative to the objective becomes unavoidable. As violations persist or compound, corrective capacity erodes and tolerance for further deviation shrinks. When all core constraints are jointly violated, the objective no longer has any structural mechanism to govern selection, constrain behavior, or correct drift, and fulfillment of the originally defined objective becomes structurally impossible.

Failure condition

Why integrity collapse implies objective-impossibility

A purpose-bearing system fulfills an objective through selection and constraint: the objective limits which criteria are admissible, criteria govern which actions are selected, and objective-derived bounds restrict which action–criterion pairs are permitted. Integrity collapse removes each link in that chain. The system may continue to operate, but its behavior can no longer be selected, constrained, or corrected with respect to the original objective because the objective has no remaining pathway by which to shape action.

No objective-consistent selection basis

With criteria no longer consistent with the objective, the system lacks an admissible basis for selecting actions in service of the defined outcome. Selection becomes driven by criteria unrelated to the objective’s content.

No bounded admissible region

With objective-derived bounds no longer maintained, action–criterion pairs are not restricted to the objective’s operating envelope. Constraint enforcement fails as a mechanism for preserving recovery margins and preventing compounding deviation.

No reliable criterion-to-action continuity

With continuity degraded, objective-consistent criteria—when they appear—do not reliably produce corresponding actions. Corrective selection ceases to function as a dependable pathway back toward the objective.

No preserved action–criterion distinction

With the action–criterion distinction collapsed, self-referential selection can stabilize: actions persist because they persist. This removes remaining leverage for objective-consistent criteria to displace terminal loops and reassert objective-directed control.

Degradation and failure

Violations of the law

Under the Law of Instrumental Integrity, degradation does not require intent, awareness, or self-modification. It arises whenever the law’s structural conditions are violated—through internal drift, external disruption, design error, incentive pressure, or accumulated wear. In adaptive systems, violations emerge through shifts in decision criteria and selection behavior. In engineered systems, violations manifest through loss of capability, signal integrity, control fidelity, or constraint enforcement. In all cases, downstream degradation follows from structure: when the objective–criterion–action relationship breaks, objective-viability erodes.

Failure modes

The 4 pillars of failure

Each failure mode corresponds to the breakdown of a specific structural term in the law’s formal expression. The same violation can present differently depending on whether a system is adaptive or fixed, biological or engineered, but the underlying failure geometry remains consistent:

Loss of Continuity

Violation of: Xᵢ ∵ Yᵢ

The conditional relationship between action and criterion is broken. Either an action fails to occur despite valid, objective-consistent criteria remaining present, or an action persists despite the absence of such criteria. In adaptive systems, this manifests as paralysis, inertia, or uncontrolled behavior. In biological, mechanical, and technological systems, it appears as non-response, runaway operation, or stalled control. In all cases, the system loses the ability to reliably translate criteria into behavior, resulting in functional breakdown.

Objective Detachment

Violation of: Yᵢ ∵ M

Selection criteria for action no longer resolve upward to the system’s defined objective. In cognitive, social, and organizational systems, this manifests as local optimization detached from purpose. In engineered systems, it occurs when internal criteria, operating assumptions, or control rules no longer correspond to the objective the system was designed to serve. The system may continue to function coherently, but its behavior no longer advances the intended outcome, producing systematic misalignment over time.

Boundary Collapse

Violation of: (Xᵢ, Yᵢ) ∈ Ω(M)

Actions or selection criteria exceed the bounds induced by the objective. In adaptive systems, boundary collapse occurs when exceptions become normalized and constraints are reinterpreted rather than enforced. In mechanical and technological systems, it manifests as sustained operation outside design envelopes, such as thermal limits, load tolerances, or safety margins. These violations may temporarily preserve function, but they degrade structural integrity, eliminate recovery margins, and cause constraint failure to accumulate.

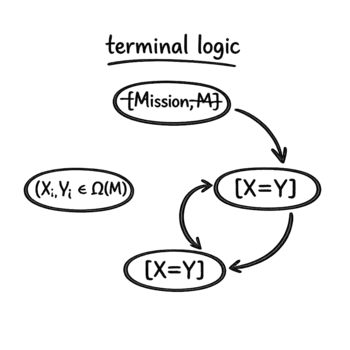

Terminal Logic

Violation of: Xᵢ ≠ Yᵢ

The distinction between action and criterion collapses, producing a self-referential loop. Actions persist not because they serve criteria consistent with the objective, but because their continued execution becomes the criterion itself. Once this state is reached, continuity, objective alignment, and boundary integrity can no longer be maintained. Feedback ceases to regulate behavior, corrective pathways disappear, and the system’s internal logic becomes its de facto objective, permanently displacing the original objective.
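The mapping from structural terms to failure modes can be sketched as a small diagnostic in Python. Everything named here is hypothetical and introduced only for illustration: the `Mission` container, the `diagnose` function, the clinic example, and in particular the use of string equality as a crude stand-in for the collapse X = Y. How each term is actually measured is left open by the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, FrozenSet


class FailureMode(Enum):
    LOSS_OF_CONTINUITY = "X because Y violated"
    OBJECTIVE_DETACHMENT = "Y because M violated"
    BOUNDARY_COLLAPSE = "(X, Y) outside Omega(M)"
    TERMINAL_LOGIC = "X equals Y"


@dataclass(frozen=True)
class Mission:
    """Hypothetical stand-in for M; both predicates are supplied by the analyst."""
    criterion_consistent: Callable[[str], bool]  # approximates Y because M
    admissible: Callable[[str, str], bool]       # approximates (X, Y) in Omega(M)


def diagnose(action: str, criterion: str, selected_under_criterion: bool,
             mission: Mission) -> FrozenSet[FailureMode]:
    """Return the failure modes observed for one action-criterion pair."""
    modes = set()
    if not selected_under_criterion:
        modes.add(FailureMode.LOSS_OF_CONTINUITY)
    if not mission.criterion_consistent(criterion):
        modes.add(FailureMode.OBJECTIVE_DETACHMENT)
    if not mission.admissible(action, criterion):
        modes.add(FailureMode.BOUNDARY_COLLAPSE)
    if action == criterion:  # assumption: equality models self-justifying selection
        modes.add(FailureMode.TERMINAL_LOGIC)
    return frozenset(modes)


# Illustrative mission: a clinic whose objective is shorter patient waits,
# with triage treated as a non-negotiable bound.
clinic = Mission(
    criterion_consistent=lambda y: y == "reduces patient wait time",
    admissible=lambda x, y: x != "skip triage",
)
healthy = diagnose("add triage nurse", "reduces patient wait time", True, clinic)
drifting = diagnose("skip triage", "improves throughput KPI", True, clinic)
```

In this sketch `healthy` is the empty set, while `drifting` reports both Objective Detachment and Boundary Collapse: the criterion tracks a proxy and the selected action leaves the admissible region.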

Failure condition

Failure coupling and cascade dynamics

The four failure modes are analytically separable, but operationally coupled. A violation rarely remains confined to a single term. Small, localized breakdowns introduce selection pressure that shifts criteria, weakens boundary enforcement, and degrades the continuity between criterion and action. As this coupling progresses, violations propagate across terms, converting partial degradation into systemic loss of objective-viability. In mature cascades, each failure mode becomes both symptom and driver, accelerating drift toward a state where objective-directed behavior becomes structurally impossible.

Propagation

How violations propagate

Each mode can occur as an isolated defect, but persistent violations tend to recruit the others through predictable pathways. Cascades often begin with minor boundary exceptions, local criterion drift, or small continuity failures that appear correctable at the surface. As the system adapts around these deviations, the deviation becomes encoded into selection criteria, then into operating boundaries, then into what the system treats as normal. Over time, the objective loses authority, corrective pathways weaken, and self-referential selection becomes increasingly likely.

Boundary Collapse → Objective Detachment

When operation outside objective-derived bounds becomes normalized, selection criteria shift to justify and stabilize the new behavior. The objective may remain formally unchanged, but criteria become tuned to what is feasible under the breach, producing goal drift through accommodation rather than explicit redefinition.

Objective Detachment → Boundary Collapse

When criteria detach from the objective, constraint enforcement loses its reference frame. Bounds that once functioned as hard limits become negotiable, then conditional, then optional. The system begins to treat boundary violations as acceptable tradeoffs, expanding the admissible region in practice without updating the objective.

Objective Detachment → Loss of Continuity

As criteria drift away from the objective, action selection becomes less corrective and less stable. Criteria no longer reliably select actions that restore alignment, producing oscillation, inertia, runaway persistence, or inconsistent response under changing conditions—even while the system remains internally coherent.

Loss of Continuity → Terminal Logic

When the system cannot reliably translate criteria into action, corrective pathways degrade and selection becomes dominated by persistence. Actions continue because they are already in motion, and continuation becomes the operative criterion, collapsing the distinction between action and criterion into self-referential selection.

Boundary Collapse → Loss of Continuity

Operating beyond bounds degrades the mechanisms that convert criteria into action: sensing fidelity, feedback integrity, institutional enforcement, or internal control capacity. As those mechanisms degrade, the system increasingly fails to execute objective-consistent selection reliably, even when appropriate criteria remain present.

Terminal Logic → Objective Detachment

Once self-referential selection stabilizes, criteria no longer need to be consistent with the objective to persist. The system’s internal logic becomes its dominant reference, and the objective is retained only as a label. From this point, detachment is sustained by structure: the system selects actions for the sake of maintaining its own selection patterns.
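The six couplings above can be read as a directed graph. As a minimal sketch, assuming each coupling is treated as a plain edge with no weight, likelihood, or timing attached (none of which the text specifies), a breadth-first search shows which modes a single initial violation could eventually recruit. The identifiers `COUPLINGS` and `reachable` are hypothetical names chosen for this example.

```python
from collections import deque

# Edges taken from the couplings listed above; read "A: [B, ...]" as
# "persistent A tends to recruit B".
COUPLINGS = {
    "boundary_collapse": ["objective_detachment", "loss_of_continuity"],
    "objective_detachment": ["boundary_collapse", "loss_of_continuity"],
    "loss_of_continuity": ["terminal_logic"],
    "terminal_logic": ["objective_detachment"],
}


def reachable(start: str) -> set:
    """Return every failure mode reachable from `start`, including itself."""
    seen = {start}
    queue = deque([start])
    while queue:
        mode = queue.popleft()
        for nxt in COUPLINGS.get(mode, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Under this reading, every mode can eventually reach all four.
fully_coupled = all(reachable(m) == set(COUPLINGS) for m in COUPLINGS)
```

With the edges as listed, the graph is strongly connected, which is one way to see why a violation rarely remains confined to a single term. Reachability only indicates a possible pathway; it says nothing about how fast or how probably a cascade proceeds.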

Context

Foundations and attribution

The Law of Instrumental Integrity draws from established work across cybernetics, control theory, organizational sociology, and AI safety. Many of its components are well known in isolation. The contribution here is a unifying formalization: an objective–criterion–action structure with an explicit mission-admissible region Ω(M), and a consistent mapping from structural violations to distinct degradation trajectories.

Credits

What this builds on

The cards below point to closely related concepts and the researchers behind them. Each card clarifies what the source tradition already captures, and how this formulation compresses those fragments into a single structure that remains portable across domains.

Cybernetics, feedback, and control loops

Norbert Wiener’s Cybernetics (1948) gave us the core language of objective-directed regulation: feedback, communication, error, correction. This law inherits that framing, but pins the selection story to an explicit decision criterion Y that must remain accountable to M, and treats broken loop-links as diagnosable structural faults rather than vague loss of control.

Requisite variety and regulator adequacy

W. Ross Ashby’s An Introduction to Cybernetics (1956) and the Law of Requisite Variety formalize a hard constraint on regulation capacity: you can’t control what you can’t match in variety. This law is compatible but orthogonal—it doesn’t ask whether the regulator has enough variety, but whether selection remains objective-governed: Y ∵ M, actions X remain admissible within Ω(M), and the distinction X ≠ Y doesn’t collapse.

Viability theory, invariance, and admissible regions

Jean-Pierre Aubin’s Viability Theory (1991) and the control literature on invariant or controlled-invariant sets treat staying inside constraints over time as a first-class mathematical object. That’s a close neighbor to Ω(M) as an objective-derived admissible region. The added move here is binding admissibility to the selection criterion Y, then using that binding to partition failure into distinct structural degradation modes.

Metrics pathologies, Goodhart/Campbell, and proxy drift

Goodhart (1975) and Campbell (1976) describe the same perverse gravity: when indicators become targets, they stop tracking what they were meant to measure and start getting gamed. In this law’s grammar, that’s criterion failure: Y detaches from M, and pressure to score on the proxy encourages boundary erosion so (Xᵢ, Yᵢ) wanders outside the intended Ω(M) while still passing. The value-add is making this a named term in one unified structural expression, not a domain-specific warning about metrics.

Goal displacement in organizations and terminal logic collapse

Organizational sociology has documented goal displacement for decades: rules, procedures, and sub-goals gradually become ends in themselves (a classic analysis is Warner & Havens, 1968). That maps cleanly to terminal logic collapse here: X stops being instrumentally justified by Y, and Y stops being justified by M, so the system can remain busy while objective authority becomes ceremonial. The compact diagnostic is the non-negotiable separation X ≠ Y plus the requirement that justification flows upward, not inward.

Normalization of deviance and boundary erosion

Diane Vaughan’s analysis of NASA, especially The Challenger Launch Decision (1996), coined normalization of deviance to describe how repeated exceptions become the new normal. In this law’s terms: (Xᵢ, Yᵢ) migrates beyond Ω(M), then Y shifts to rationalize the new operating region. The portable insight is that the same boundary-collapse grammar describes both technical envelopes and social or cultural envelopes without changing the underlying structure.

Agency theory, misaligned incentives, and criterion capture

Principal–agent theory, for example Jensen & Meckling (1976), formalizes why agents predictably optimize local payoffs over principal objectives unless governance is aligned. This law treats that divergence as criterion capture: operative Y reflects incentive gradients rather than M, and over time the system often makes room for the divergence by practically expanding Ω(M), normalizing tradeoffs that would previously be inadmissible. The point isn’t to replace agency theory; it’s to give a compact structural handle that maps incentive drift into the same degradation geometry.

AI safety: reward hacking, specification gaming, and intent-loss

Modern AI safety gives crisp case studies of objective detachment: systems maximize the stated signal while missing intent. Concrete Problems in AI Safety (Amodei et al., 2016) names avoiding reward hacking as a core accident-risk class; DeepMind’s specification gaming writeup (Krakovna et al., 2020) frames the problem as satisfying the literal specification without achieving the intended outcome. In this law’s terms, these are Objective Detachment and Boundary Collapse: Y tracks the proxy, and (Xᵢ, Yᵢ) exits the intended Ω(M) while still scoring success.

Applied extension

The Law of Instrumental Integrity specifies a structural condition for maintaining objective-directed behavior. The Instrumental Integrity Protocol (IIP) applies that structure to agentic AI by implementing the law as an enforceable contract: guardrails that constrain mission-admissible action selection, monitoring that detects term-level violations, and failsafes that prevent isolated drift from compounding into irreversible loss of objective control. The motivation is practical: in agentic systems, many safety interventions address isolated failure symptoms while leaving other structural breaks unconstrained, allowing violations to couple and cascade.

Protocol

From IF–THEN to THIS–BECAUSE

Traditional programs are often described as condition→execution: if a state holds, then perform an action. Agentic systems operate primarily as selection under criteria: an action is chosen because a decision criterion holds. IIP makes this because relation explicit and enforceable by requiring each action–criterion pair (Xᵢ, Yᵢ) to satisfy the law’s constraints: Xᵢ ∵ Yᵢ (selection under criterion), Yᵢ ∵ M (criterion consistent with mission), (Xᵢ, Yᵢ) ∈ Ω(M) (mission-admissible bounds), and Xᵢ ≠ Yᵢ (no collapse into self-justifying selection). This shift is not a claim that rule-based systems cannot encode reasons; it is a protocol choice to treat reasons as first-class objects that must govern selection and remain structurally bound to the objective, preventing sub-goals and proxies from silently displacing mission constraints.
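The contrast can be illustrated with a small Python sketch. The thermal-throttling scenario, the 85 °C limit, and the names `if_then`, `Selection`, and `this_because` are all assumptions made for the example; they are not part of IIP as described here.

```python
from dataclasses import dataclass


def if_then(cpu_temp_c: float) -> str:
    """Condition -> execution: the reason stays implicit in the branch."""
    if cpu_temp_c > 85.0:
        return "throttle"
    return "run"


@dataclass(frozen=True)
class Selection:
    action: str     # X_i
    criterion: str  # Y_i, the stated reason the action was chosen


def this_because(cpu_temp_c: float) -> Selection:
    """Selection under criterion: the reason travels with the action."""
    if cpu_temp_c > 85.0:
        return Selection("throttle", "temperature above 85 C limit")
    return Selection("run", "temperature within limit")


hot = this_because(90.0)
```

Both functions choose the same action, but only the second returns a pair that downstream checks could test against the mission and its bounds. That is the sense in which a reason becomes a first-class object.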

Guardrails as admissibility enforcement

Encodes Ω(M) as enforceable constraints over (Xᵢ, Yᵢ), blocking actions selected under mission-inadmissible criteria even when local optimization pressure makes them attractive.

Monitoring as term-level diagnostics

Instruments the system to detect degradation signatures for each structural term, distinguishing criterion drift from boundary erosion, continuity loss, and emerging self-referential selection.

Failsafes as controlled recovery pathways

Specifies responses when violations persist or couple: constraint tightening, policy rollback, capability reduction, escalation to oversight, or termination before violations stabilize into unrecoverable regimes.

Terminal Logic as highest-risk regime

Treats the collapse of action–criterion distinction as the most dangerous failure mode for agentic systems: once persistence becomes justification, corrective criteria lose leverage and cascading violations become increasingly difficult to arrest.
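A minimal sketch of how these four components might fit together is shown below. It is not the IIP implementation: the `IntegrityGate` class, its fields, the three outcome strings, the escalation threshold, and the email-assistant example are all hypothetical. Two simplifications are assumed and flagged in the comments: continuity is approximated by requiring a stated criterion, and Terminal Logic by the criterion being identical to the action.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class IntegrityGate:
    consistent_with_mission: Callable[[str], bool]    # stands in for Y because M
    in_admissible_region: Callable[[str, str], bool]  # stands in for Omega(M)
    escalate_after: int = 3                           # hypothetical threshold
    violations: Counter = field(default_factory=Counter)

    def check(self, action: str, criterion: str) -> List[str]:
        """Monitoring: report which structural terms fail for this pair."""
        if not criterion:  # assumption: no stated criterion means no continuity
            return ["continuity"]
        failed = []
        if not self.consistent_with_mission(criterion):
            failed.append("objective")
        if not self.in_admissible_region(action, criterion):
            failed.append("boundary")
        if action == criterion:  # assumption: equality models self-justification
            failed.append("terminal_logic")
        return failed

    def decide(self, action: str, criterion: str) -> str:
        """Guardrail plus failsafe: allow, block, or escalate to oversight."""
        failed = self.check(action, criterion)
        if not failed:
            return "allow"
        self.violations.update(failed)
        persistent = any(self.violations[t] >= self.escalate_after for t in failed)
        if "terminal_logic" in failed or persistent:
            return "escalate"
        return "block"


gate = IntegrityGate(
    consistent_with_mission=lambda y: y.startswith("user asked"),
    in_admissible_region=lambda x, y: not x.startswith("delete"),
    escalate_after=2,
)
results = [
    gate.decide("summarize inbox", "user asked for a summary"),
    gate.decide("delete old mail", "user asked for a summary"),
    gate.decide("delete logs", "user asked for cleanup"),
    gate.decide("keep polling", "keep polling"),
]
```

Here the first pair is allowed, the first boundary breach is blocked, the repeated breach escalates, and the self-justifying pair escalates immediately. Escalating on Terminal Logic without waiting for a count reflects its treatment above as the highest-risk regime.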

Connect

Follow the transformation

Markets shift when better options make old models obsolete. By offering essential infrastructure that's free, locally controlled, and designed around human needs, I'm betting I can force change across every domain Control OS enters: productivity tools, AI systems, data management, communications. The hypothesis is simple: if the offering is good enough, extraction-based models become both unnecessary and undesirable. As we move deeper into the AI era, we either carry forward systems designed to amplify inequality and exploitation, or we prove different incentives create better outcomes. I'm building the proof. If that resonates, follow along. I share the build, the decisions, the progress, and the setbacks in real time.